PoseSync - Robust Pose-based Video Synchronization

Picture a dancer, a guide, an athlete, or a sports enthusiast passionately honing their craft. Now, imagine having a powerful tool that can dissect their every move and place it side by side with the same move performed by an expert. In this blog post, we introduce PoseSync, a pose-based video synchronization tool developed by Infocusp Innovations. PoseSync's mission is to seamlessly align frames from multiple videos in which individuals are attempting the same actions, even when their timing and execution don't quite match up.

Video synchronization, which is quite intuitive for humans, poses a number of challenges when automated, including:

- Pose Differences: differences in pose between the people performing the action.

- Speed Differences: people perform the action at different speeds, so the timing of their movements differs.

- Scale Differences: a person's apparent size depends on their distance from the camera and on inherent differences in body size.

- Position Shifts: the person may appear at different positions within the frame.

How Does PoseSync Work?

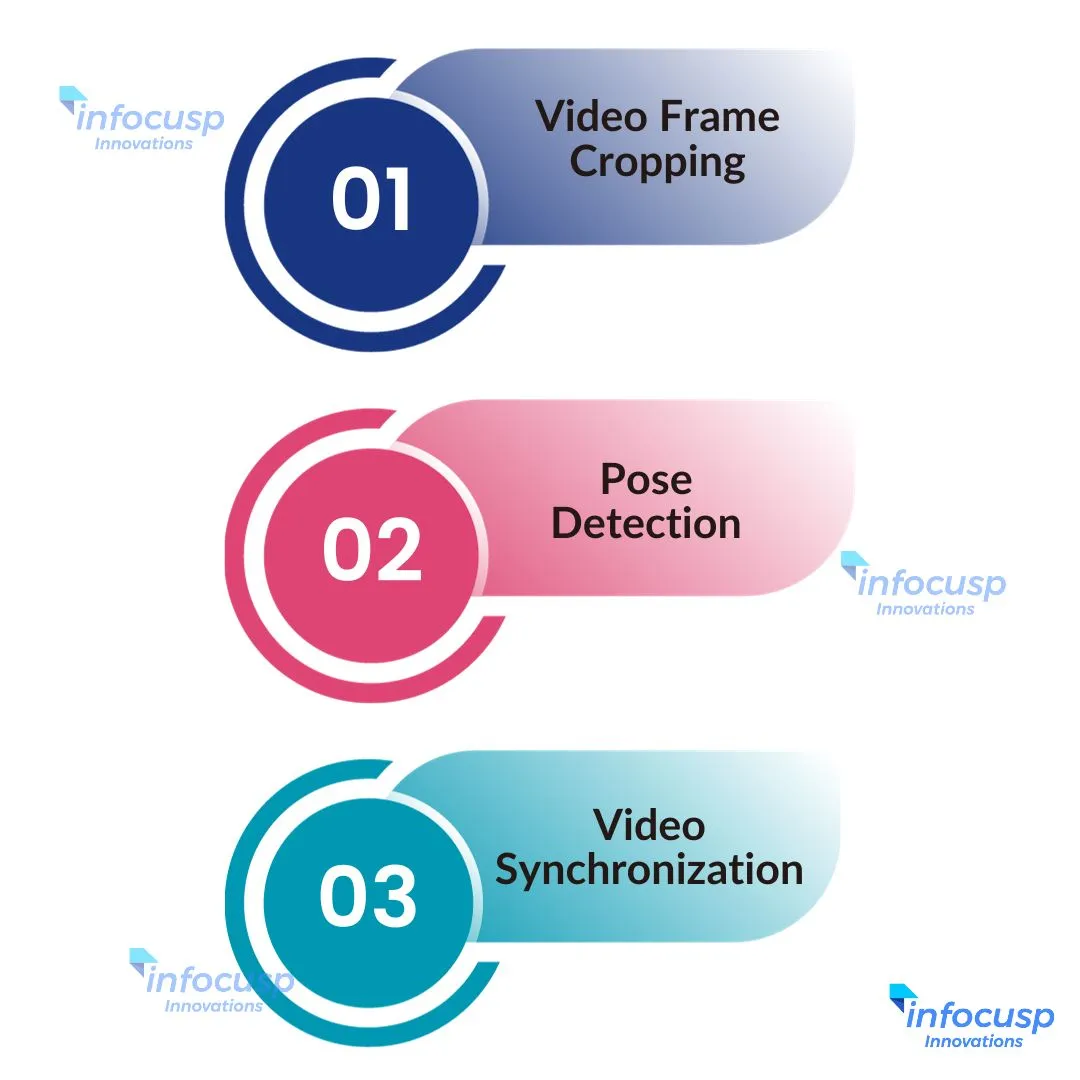

PoseSync synchronizes any two videos using state-of-the-art models in its backend to estimate poses and then match them across the videos. It consists of three stages:

- Video Frame Cropping: PoseSync begins by cropping video frames using YOLOv5, which improves pose-detection accuracy by removing background clutter and other people from the frame.

- Pose Detection/Estimation: The cropped frames are then processed with MoveNet, a state-of-the-art pose detection model that returns keypoints for each frame.

- Video Synchronization (Dynamic Time Warping, DTW): To synchronize the two videos, PoseSync employs Dynamic Time Warping (DTW), a versatile sequence-alignment algorithm originally developed for speech recognition. To handle size differences between poses, PoseSync introduces an Angle-Mean Absolute Error (Angle-MAE) metric, which computes the Mean Absolute Error (MAE) between the angles at key skeleton joints. Because it depends only on joint angles, this metric is invariant to scale, position, and angle changes in the pose.
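The Angle-MAE idea can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not PoseSync's implementation: the names `JOINT_TRIPLES`, `joint_angles`, and `angle_mae` and the particular set of joints are hypothetical, and each pose is assumed to be a (17, 2) array of keypoint coordinates in the 17-keypoint COCO order that MoveNet outputs, with the confidence scores dropped.

```python
import numpy as np

# Hypothetical joint set: each triple (a, b, c) measures the angle at keypoint b
# between the limbs b->a and b->c. Indices follow the 17-keypoint COCO order
# (5/6 shoulders, 7/8 elbows, 9/10 wrists, 11/12 hips, 13/14 knees, 15/16 ankles).
# PoseSync's actual choice of joints may differ.
JOINT_TRIPLES = np.array([
    (5, 7, 9),     # left elbow
    (6, 8, 10),    # right elbow
    (7, 5, 11),    # left shoulder
    (8, 6, 12),    # right shoulder
    (5, 11, 13),   # left hip
    (6, 12, 14),   # right hip
    (11, 13, 15),  # left knee
    (12, 14, 16),  # right knee
])


def joint_angles(keypoints: np.ndarray) -> np.ndarray:
    """Return the interior angle in degrees (0..180) at each joint of a (17, 2) pose."""
    a = keypoints[JOINT_TRIPLES[:, 0]]
    b = keypoints[JOINT_TRIPLES[:, 1]]
    c = keypoints[JOINT_TRIPLES[:, 2]]
    v1, v2 = a - b, c - b  # limb vectors leaving the joint
    dot = np.sum(v1 * v2, axis=1)
    cross = v1[:, 0] * v2[:, 1] - v1[:, 1] * v2[:, 0]
    # atan2(|cross|, dot) gives the unsigned angle without dividing by limb lengths
    return np.degrees(np.arctan2(np.abs(cross), dot))


def angle_mae(pose_a: np.ndarray, pose_b: np.ndarray) -> float:
    """Mean absolute difference between the joint angles of two poses."""
    return float(np.mean(np.abs(joint_angles(pose_a) - joint_angles(pose_b))))
```

Because each angle is computed from the dot and cross products of two limb vectors, uniformly scaling, shifting, or rotating the whole skeleton in the image plane leaves every angle, and therefore the Angle-MAE, unchanged. Comparing a pose with a copy that is scaled by 2.5 and moved elsewhere in the frame yields an Angle-MAE of essentially zero.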

As shown in the figure above, PoseSync takes two video clips as input. Their frames are first cropped with an object detection tool, which leads to more accurate pose estimation. Once poses have been estimated for both videos, they are passed to the temporal alignment algorithm, DTW, which aligns similar sequences of actions.
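The DTW step can be sketched with the classic dynamic-programming recurrence. This is a minimal, unoptimized illustration rather than PoseSync's code: the function name `dtw` and the step pattern (match, insertion, and deletion, all weighted equally) are assumptions. In PoseSync the per-frame distance would be the Angle-MAE between two poses; here a plain absolute difference between numbers stands in so the example runs on its own.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def dtw(
    seq_a: Sequence[T], seq_b: Sequence[T], dist: Callable[[T, T], float]
) -> Tuple[float, List[Tuple[int, int]]]:
    """Classic DTW: return the total alignment cost and the aligned (i, j) frame pairs."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost of aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = dist(seq_a[i - 1], seq_b[j - 1])
            # Extend the cheapest of: diagonal match, repeat a frame of a, repeat a frame of b
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])

    # Backtrack from the final cell to recover the warping path
    path: List[Tuple[int, int]] = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        steps = {
            (i - 1, j - 1): cost[i - 1][j - 1],
            (i - 1, j): cost[i - 1][j],
            (i, j - 1): cost[i][j - 1],
        }
        i, j = min(steps, key=steps.get)
    path.reverse()
    return cost[n][m], path


# Usage: the second sequence is the first one performed at half speed.
total, path = dtw([0, 1, 2, 3], [0, 0, 1, 1, 2, 2, 3, 3], lambda x, y: abs(x - y))
print(total)  # 0.0
print(path)   # [(0, 0), (0, 1), (1, 2), (1, 3), (2, 4), (2, 5), (3, 6), (3, 7)]
```

Because the slower sequence simply repeats each frame, DTW maps every frame of it onto the matching frame of the faster one at zero total cost, which is exactly the kind of speed difference the alignment stage has to absorb. Time and memory are O(nm) in the two sequence lengths.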

PoseSync makes performance evaluation more accessible and insightful. By aligning frames from multiple videos of individuals attempting the same actions, it provides a close, side-by-side view of each performance. Whether you're a dance enthusiast, an outdoor adventurer, a sports fanatic, or simply someone curious about the art of improvement, PoseSync has the potential to revolutionize skill development.

Explore PoseSync

- Read the full research paper on arXiv for a detailed understanding of the approach.

- Try out the PoseSync demo on Hugging Face and share your results with us.

- Access the Python implementation of the algorithm on GitHub for practical use and contributions.


Conclusion

Whether your pursuit is dancing with grace, navigating the unknown, excelling in sports, or simply striving for excellence in any field, PoseSync can be your beacon of inspiration and guidance on your path to greatness.